ex1.m

Part 1: Basic Function

- Modify warmUpExercise.m to return a 5 x 5 identity matrix

      A = eye(5);

Part 2: Plotting

- Plot the training data in a figure in plotData.m

      data = load('ex1data1.txt');
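A minimal sketch of how the loaded data might then be plotted, assuming the first column holds the feature and the second the target. The small `data` matrix and the axis labels are made-up placeholders standing in for ex1data1.txt, not values from the exercise.

```octave
% Hypothetical stand-in for load('ex1data1.txt'):
% column 1 = feature, column 2 = target
data = [1.0 1.5; 2.0 3.1; 3.0 4.4; 4.0 6.2];
x = data(:, 1);
y = data(:, 2);
m = length(y);                        % number of training examples

figure;
plot(x, y, 'rx', 'MarkerSize', 10);   % red crosses, one per example
xlabel('feature (column 1)');         % placeholder label
ylabel('target (column 2)');          % placeholder label
```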

Part 3: Cost and Gradient descent

- Complete the code in computeCost.m, a function that computes J(θ)

      J = sum(((X * theta) - y) .^ 2) / (2 * m);
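As a quick sanity check of this cost expression, the sketch below evaluates it on a toy data set where y equals x exactly, so theta = [0; 1] should give zero cost. The data are invented for illustration, and the anonymous function only stands in for computeCost.m.

```octave
X = [1 1; 1 2; 1 3];   % first column is the intercept term
y = [1; 2; 3];
m = length(y);

% Stand-in for computeCost.m, using the same expression as above
computeCost = @(X, y, theta) sum(((X * theta) - y) .^ 2) / (2 * m);

J_zero = computeCost(X, y, [0; 0]);   % (1 + 4 + 9) / (2 * 3)
J_fit  = computeCost(X, y, [0; 1]);   % perfect fit, so no error
```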

- Perform a single gradient step on the parameter vector theta in gradientDescent.m

      for iter = 1:num_iters
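One way the loop body might look, as a sketch rather than the author's code: a vectorized update of every component of theta at once, with the cost recorded per iteration. The toy data, `alpha`, and `num_iters` values are assumptions chosen so the run converges.

```octave
X = [1 1; 1 2; 1 3];   % toy data where y = x exactly
y = [1; 2; 3];
m = length(y);
theta = zeros(2, 1);
alpha = 0.1;           % assumed learning rate
num_iters = 1500;      % assumed iteration count
J_history = zeros(num_iters, 1);

for iter = 1:num_iters
    % Simultaneous update: theta := theta - alpha * gradient of J
    theta = theta - (alpha / m) * (X' * (X * theta - y));
    % Record the cost so convergence can be inspected afterwards
    J_history(iter) = sum((X * theta - y) .^ 2) / (2 * m);
end
```

On this data theta should approach [0; 1], and `J_history` should shrink toward zero. If it grows instead, the learning rate is likely too large.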

ex2.m

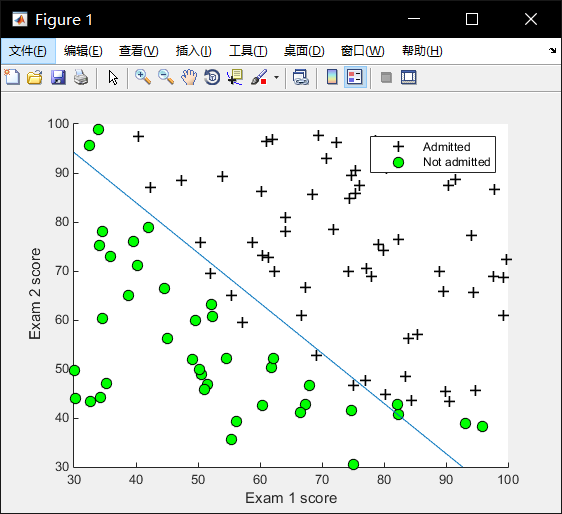

Part 1: Plotting

- Plot the positive and negative examples on a 2D plot, using the option 'k+' for the positive examples and 'ko' for the negative examples

      positive = find(y == 1);
      negative = find(y == 0);
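A sketch of how the two index vectors might be used to draw the plot. The four data points are hypothetical, and the line width, marker size, and fill colour are illustrative choices rather than requirements.

```octave
X = [30 40; 60 85; 45 50; 80 90];   % hypothetical two-feature examples
y = [0; 1; 0; 1];

positive = find(y == 1);   % row indices of the positive examples
negative = find(y == 0);   % row indices of the negative examples

figure; hold on;
% 'k+' = black plus signs, 'ko' = black circles
plot(X(positive, 1), X(positive, 2), 'k+', 'LineWidth', 2, 'MarkerSize', 7);
plot(X(negative, 1), X(negative, 2), 'ko', 'MarkerFaceColor', 'y', 'MarkerSize', 7);
hold off;
```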

Part 2: Compute Cost and Gradient

- Compute the sigmoid of each value of z (z can be a matrix, vector or scalar)

      % Y = exp(X) returns the exponential e^x for each element of the array X
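Because `exp` works element by element, the sigmoid can be written once and applied to scalars, vectors, and matrices alike. A minimal sketch, with an anonymous function standing in for sigmoid.m:

```octave
% Element-wise sigmoid: ./ keeps the division per element
sigmoid = @(z) 1 ./ (1 + exp(-z));

s_scalar = sigmoid(0);               % exactly 0.5
s_vector = sigmoid([-100 0 100]);    % close to 0, exactly 0.5, close to 1
s_matrix = sigmoid(zeros(2, 3));     % output has the same shape as the input
```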

- Compute the cost of a particular choice of theta. You should set J to the cost

      J = (1 / m) * sum(-y .* log(sigmoid(X * theta)) - (1 - y) .* log(1 - sigmoid(X * theta)));
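With theta set to all zeros, every prediction is 0.5, so the logistic cost should come out to log(2) ≈ 0.693 whatever the data. The sketch below runs that check on invented data, assuming the usual cross-entropy form of the cost.

```octave
sigmoid = @(z) 1 ./ (1 + exp(-z));

X = [1 30 40; 1 60 85; 1 45 50; 1 80 90];   % hypothetical data, intercept column first
y = [0; 1; 0; 1];
m = length(y);
theta = zeros(3, 1);

h = sigmoid(X * theta);   % every entry is 0.5 when theta is zero
J = (1 / m) * sum(-y .* log(h) - (1 - y) .* log(1 - h));
```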

- Compute the partial derivatives and set grad to the partial derivatives of the cost w.r.t. each parameter in theta

      for j = 1:numel(theta)
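A sketch of how a per-parameter loop might be filled in, next to an equivalent vectorized form. Both are assumptions about the intended computation, and the data and theta values are invented.

```octave
sigmoid = @(z) 1 ./ (1 + exp(-z));

X = [1 2 3; 1 4 1; 1 0 5; 1 3 3];   % hypothetical data, intercept column first
y = [0; 1; 0; 1];
m = length(y);
theta = [0.1; -0.2; 0.3];
h = sigmoid(X * theta);

% Loop form: one partial derivative per parameter
grad_loop = zeros(size(theta));
for j = 1:numel(theta)
    grad_loop(j) = sum((h - y) .* X(:, j)) / m;
end

% Vectorized form: the same sums expressed as one matrix product
grad_vec = (X' * (h - y)) / m;
```

The two results agree up to rounding. The vectorized form is shorter and avoids the explicit loop.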

Part 3: Optimizing using fminunc

Part 4: Predict and Accuracies

- Complete the following code to make predictions using your learned logistic regression parameters. You should set p to a vector of 0's and 1's

      p = sigmoid(X * theta) >= 0.5;
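A small sketch of the thresholding step and of how training accuracy might be computed from it. The inputs, labels, and theta are invented for illustration.

```octave
sigmoid = @(z) 1 ./ (1 + exp(-z));

X = [1 -2; 1 0; 1 3];   % hypothetical examples, intercept column first
y = [0; 0; 1];          % hypothetical labels
theta = [0; 1];         % hypothetical learned parameters

% X * theta = [-2; 0; 3], so the sigmoid values are below, at, and above 0.5
p = sigmoid(X * theta) >= 0.5;            % logical column vector
accuracy = mean(double(p == y)) * 100;    % percentage of correct predictions
```

Here the second example sits exactly on the threshold and is predicted as 1, so two of the three predictions match the labels.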

ex2_reg.m

Part 1: Regularized Logistic Regression

- Compute the cost of a particular choice of theta. You should set J to the cost
  - Tip: in Octave/MATLAB, indexing starts from 1, so the theta(1) parameter (which corresponds to θ0) should not be regularized in the code

        J = (1 / m) * sum(-y .* log(sigmoid(X * theta)) - (1 - y) .* log(1 - sigmoid(X * theta))) + (lambda / (2 * m)) * sum(theta(2:end) .^ 2);
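To make the role of the penalty term visible, the sketch below computes the unregularized cost and the penalty separately. The data, theta, and lambda are invented. Note that theta(1) is deliberately large yet contributes nothing to the penalty.

```octave
sigmoid = @(z) 1 ./ (1 + exp(-z));

X = [1 2 3; 1 4 1; 1 0 5; 1 3 3];   % hypothetical data, intercept column first
y = [0; 1; 0; 1];
m = length(y);
theta = [5; 0.5; -0.5];
lambda = 1;                          % assumed regularization strength

h = sigmoid(X * theta);
J_unreg = (1 / m) * sum(-y .* log(h) - (1 - y) .* log(1 - h));

% Penalty skips theta(1): (1 / 8) * (0.25 + 0.25)
penalty = (lambda / (2 * m)) * sum(theta(2:end) .^ 2);
J = J_unreg + penalty;
```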

- Compute the partial derivatives and set grad to the partial derivatives of the cost w.r.t. each parameter in theta

      grad(1) = sum((sigmoid(X * theta) - y) .* X(:, 1)) / m;
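The remaining components might be handled as in the following sketch, which starts from the unregularized gradient and adds the (lambda / m) * theta(j) term to every entry except the first. This is an assumed vectorized formulation on invented data, not the author's code.

```octave
sigmoid = @(z) 1 ./ (1 + exp(-z));

X = [1 2 3; 1 4 1; 1 0 5; 1 3 3];   % hypothetical data, intercept column first
y = [0; 1; 0; 1];
m = length(y);
theta = [5; 0.5; -0.5];
lambda = 1;                          % assumed regularization strength

h = sigmoid(X * theta);
grad_unreg = (X' * (h - y)) / m;     % gradient without regularization

grad = grad_unreg;
% Regularize every parameter except theta(1), which corresponds to θ0
grad(2:end) = grad(2:end) + (lambda / m) * theta(2:end);
```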